publications

Publications by category in reverse chronological order. Generated by jekyll-scholar.

2025

- UIST

ProMemAssist: Exploring Timely Proactive Assistance Through Working Memory Modeling in Multi-Modal Wearable Devices. Kevin Pu, Ting Zhang, Naveen Sendhilnathan, Sebastian Freitag, Raj Sodhi, and Tanya R. Jonker. In Proceedings of the 38th Annual ACM Symposium on User Interface Software and Technology, Busan, Republic of Korea, 2025.

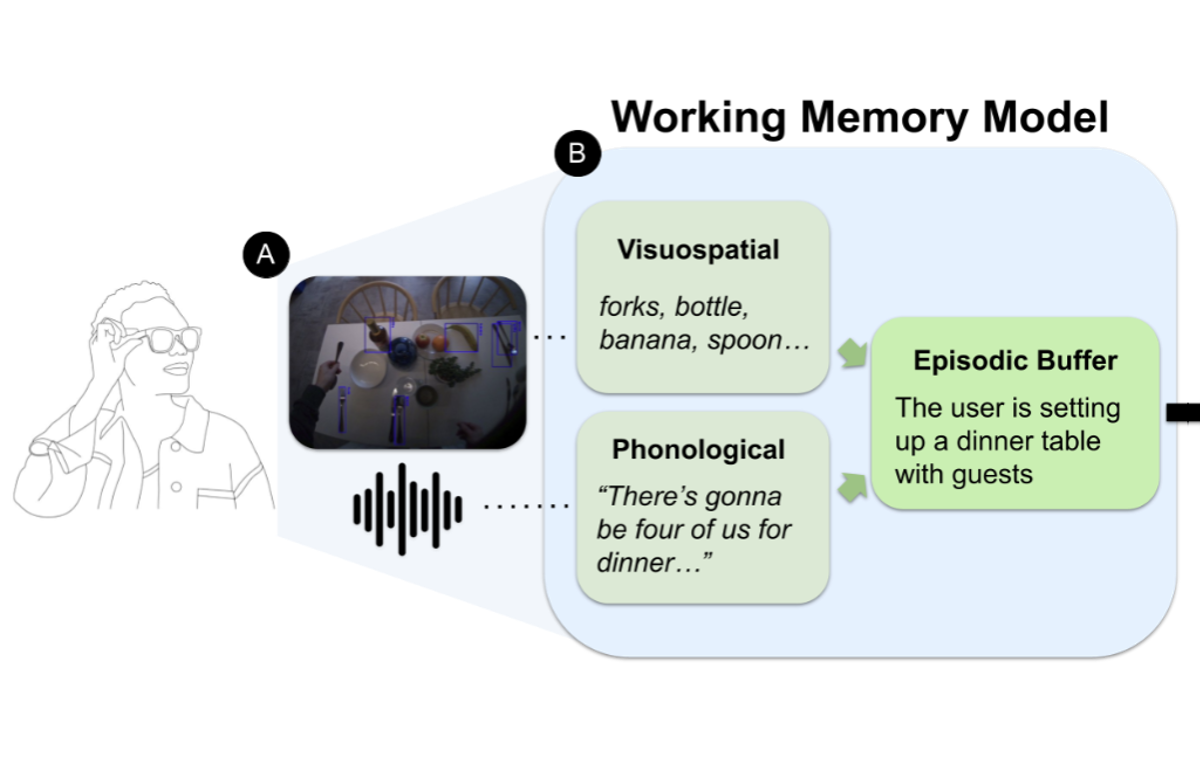

Wearable AI systems aim to provide timely assistance in daily life, but existing approaches often rely on user initiation or predefined task knowledge, neglecting users’ current mental states. We introduce ProMemAssist, a smart glasses system that models a user’s working memory (WM) in real-time using multi-modal sensor signals. Grounded in cognitive theories of WM, our system represents perceived information as memory items and episodes with encoding mechanisms, such as displacement and interference. This WM model informs a timing predictor that balances the value of assistance with the cost of interruption. In a user study with 12 participants completing cognitively demanding tasks, ProMemAssist delivered more selective assistance and received higher engagement compared to an LLM baseline system. Qualitative feedback highlights the benefits of WM modeling for nuanced, context-sensitive support, offering design implications for more attentive and user-aware proactive agents.

@inproceedings{Pu2025ProMemAssistET,
  author = {Pu, Kevin and Zhang, Ting and Sendhilnathan, Naveen and Freitag, Sebastian and Sodhi, Raj and Jonker, Tanya R.},
  title = {ProMemAssist: Exploring Timely Proactive Assistance Through Working Memory Modeling in Multi-Modal Wearable Devices},
  year = {2025},
  isbn = {9798400720376},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3746059.3747770},
  doi = {10.1145/3746059.3747770},
  booktitle = {Proceedings of the 38th Annual ACM Symposium on User Interface Software and Technology},
  articleno = {56},
  numpages = {19},
  keywords = {Proactive Assistance; User Modeling; Human-AI Interaction},
  location = {Busan, Republic of Korea},
  series = {UIST '25},
}

- UIST

StoryEnsemble: Enabling Dynamic Exploration & Iteration in the Design Process with AI and Forward-Backward Propagation. Sangho Suh, Michael Lai, Kevin Pu, Steven P. Dow, and Tovi Grossman. In Proceedings of the 38th Annual ACM Symposium on User Interface Software and Technology, Busan, Republic of Korea, 2025.

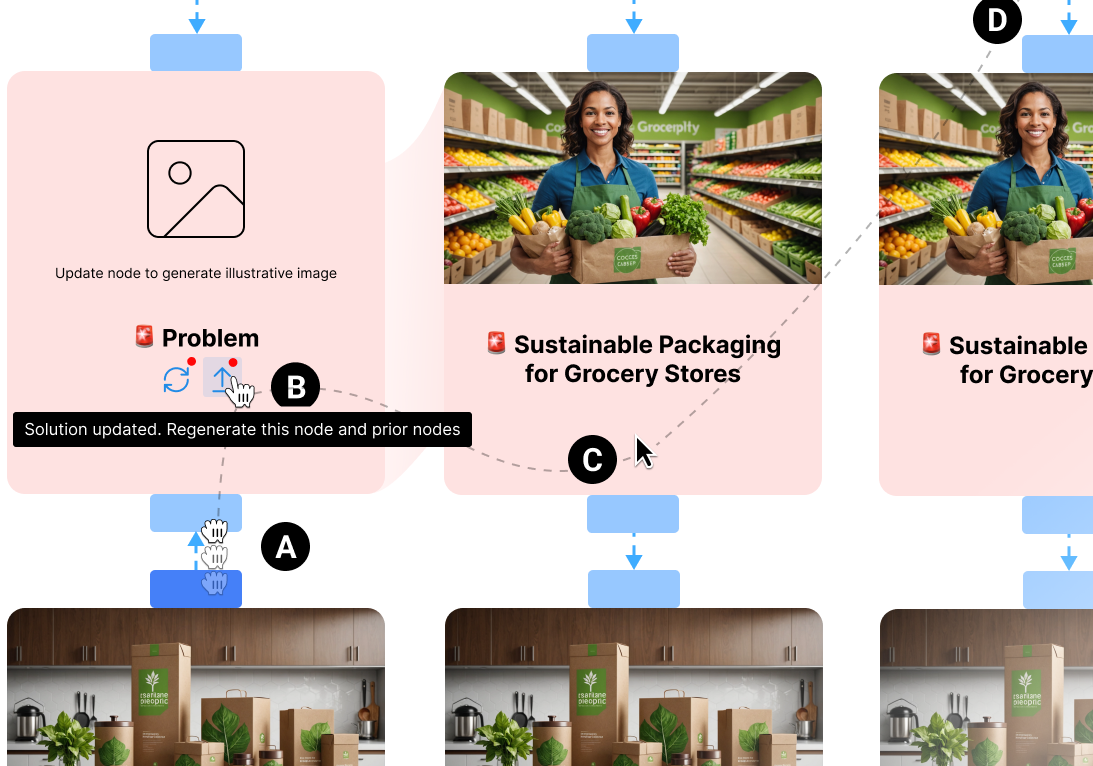

Design processes involve exploration, iteration, and movement across interconnected stages such as persona creation, problem framing, solution ideation, and prototyping. However, time and resource constraints often hinder designers from exploring broadly, collecting feedback, and revisiting earlier assumptions, making it difficult to uphold core design principles in practice. To better understand these challenges, we conducted a formative study with 15 participants, comprising UX practitioners, students, and instructors. Based on the findings, we developed StoryEnsemble, a tool that integrates AI into a node-link interface and leverages forward and backward propagation to support dynamic exploration and iteration across the design process. A user study with 10 participants showed that StoryEnsemble enables rapid, multi-directional iteration and flexible navigation across design stages. This work advances our understanding of how AI can foster more iterative design practices by introducing novel interactions that make exploration and iteration more fluid, accessible, and engaging.

@inproceedings{Suh2025StoryEnsembleED,
  author = {Suh, Sangho and Lai, Michael and Pu, Kevin and Dow, Steven P. and Grossman, Tovi},
  title = {StoryEnsemble: Enabling Dynamic Exploration \& Iteration in the Design Process with AI and Forward-Backward Propagation},
  year = {2025},
  isbn = {9798400720376},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3746059.3747772},
  doi = {10.1145/3746059.3747772},
  booktitle = {Proceedings of the 38th Annual ACM Symposium on User Interface Software and Technology},
  articleno = {203},
  numpages = {36},
  keywords = {Design frameworks; design thinking; Double Diamond; scenario-based design; forward-backward propagation; backpropagation; human-AI interaction},
  location = {Busan, Republic of Korea},
  series = {UIST '25},
}

- CHI

Assistance or Disruption? Exploring and Evaluating the Design and Trade-offs of Proactive AI Programming Support. Kevin Pu, Daniel Lazaro, Ian Arawjo, Haijun Xia, Ziang Xiao, Tovi Grossman, and Yan Chen. In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 2025.

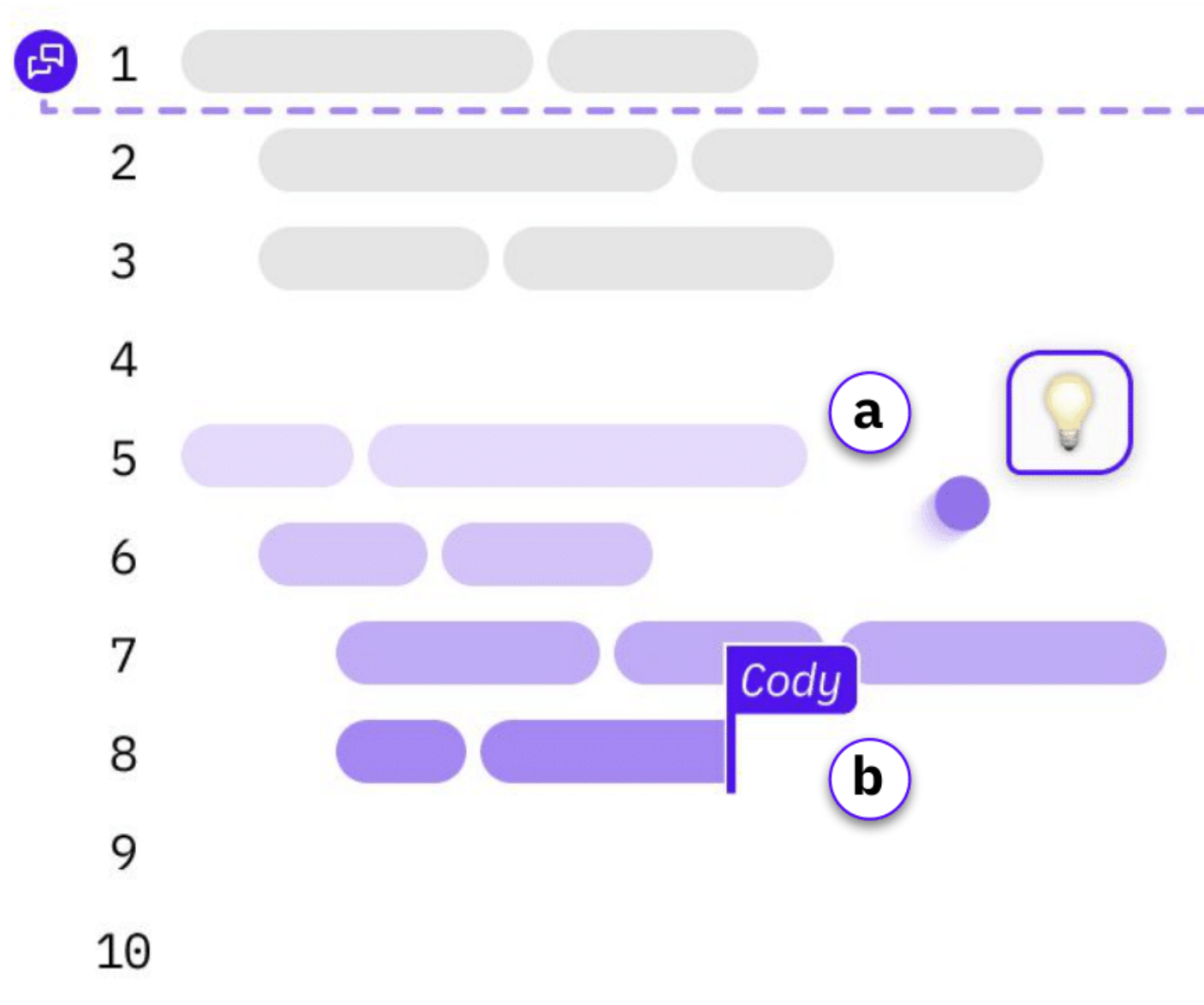

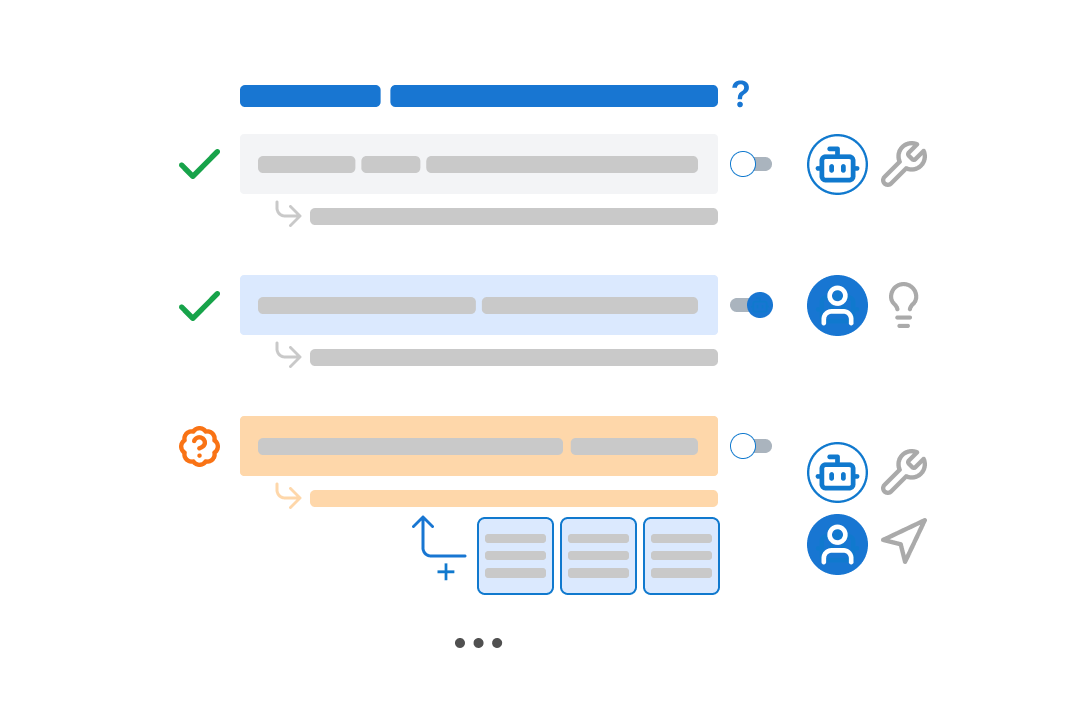

AI programming tools enable powerful code generation, and recent prototypes attempt to reduce user effort with proactive AI agents, but their impact on programming workflows remains unexplored. We introduce and evaluate Codellaborator, a design probe LLM agent that initiates programming assistance based on editor activities and task context. We explored three interface variants to assess trade-offs between increasingly salient AI support: prompt-only, proactive agent, and proactive agent with presence and context (Codellaborator). In a within-subject study (N = 18), we find that proactive agents increase efficiency compared to the prompt-only paradigm, but also incur workflow disruptions. However, presence indicators and interaction context support alleviated disruptions and improved users’ awareness of AI processes. We underscore trade-offs of Codellaborator on user control, ownership, and code understanding, emphasizing the need to adapt proactivity to programming processes. Our research contributes to the design exploration and evaluation of proactive AI systems, presenting design implications on AI-integrated programming workflow.

@inproceedings{Pu2025AssistanceOD,
  author = {Pu, Kevin and Lazaro, Daniel and Arawjo, Ian and Xia, Haijun and Xiao, Ziang and Grossman, Tovi and Chen, Yan},
  title = {Assistance or Disruption? Exploring and Evaluating the Design and Trade-offs of Proactive AI Programming Support},
  year = {2025},
  isbn = {9798400713941},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3706598.3713357},
  doi = {10.1145/3706598.3713357},
  booktitle = {Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems},
  articleno = {152},
  numpages = {21},
  keywords = {Proactive AI; Programming Assistance; Human-AI Interaction},
  location = {Yokohama, Japan},
  series = {CHI '25},
}

- CHI

IdeaSynth: Iterative Research Idea Development Through Evolving and Composing Idea Facets with Literature-Grounded Feedback. Kevin Pu, K. J. Kevin Feng, Tovi Grossman, Tom Hope, Bhavana Dalvi Mishra, Matt Latzke, Jonathan Bragg, Joseph Chee Chang, and Pao Siangliulue. In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 2025.

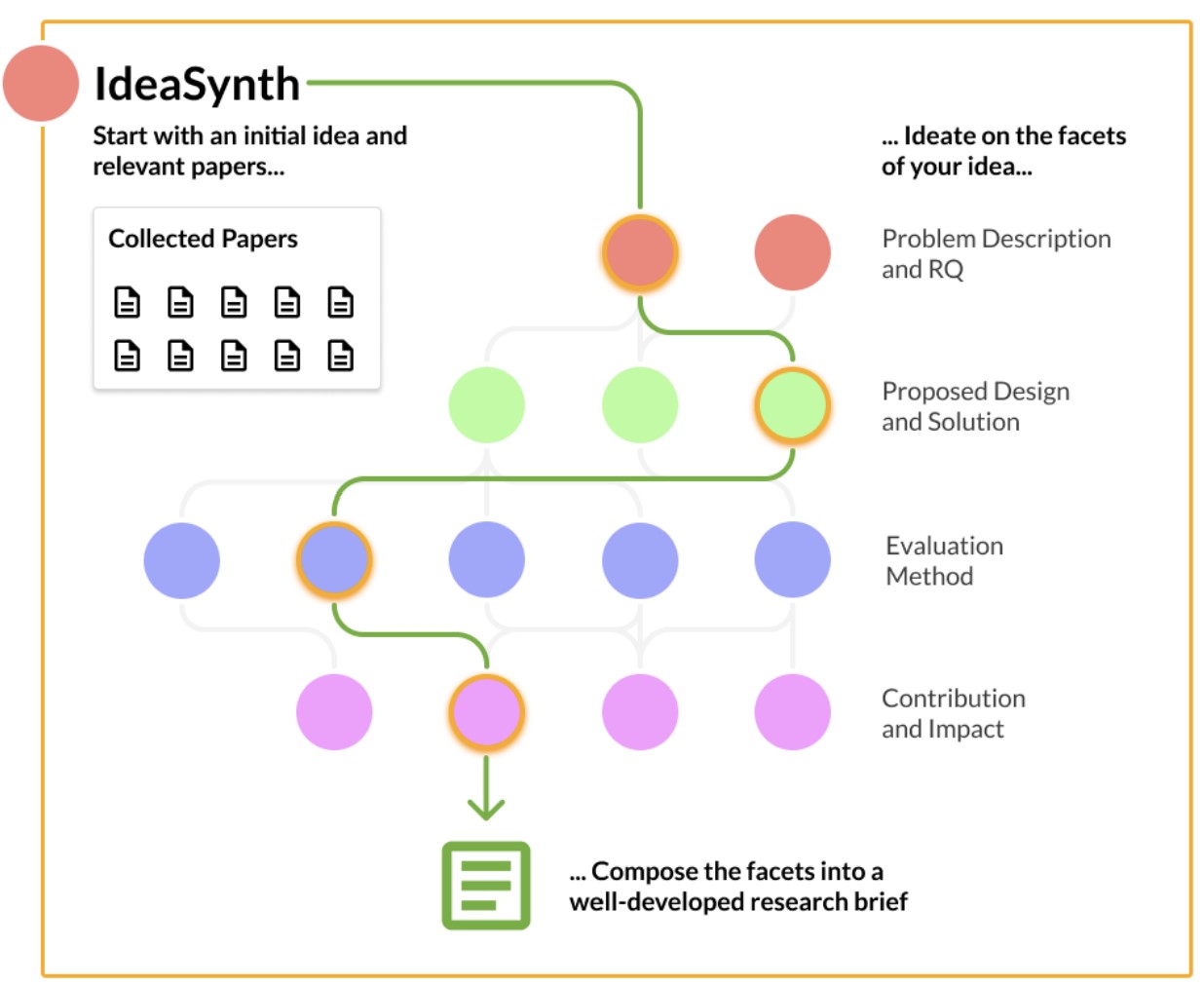

Research ideation involves broadly exploring and deeply refining ideas. Both require deep engagement with literature. Existing tools focus primarily on broad idea generation, yet offer little support for the iterative specification, refinement, and evaluation needed to further develop initial ideas. To bridge this gap, we introduce IdeaSynth, a research idea development system that uses LLMs to provide literature-grounded feedback for articulating research problems, solutions, evaluations, and contributions. IdeaSynth represents these idea facets as nodes on a canvas, and allows researchers to iteratively refine them by creating and exploring variations and combinations. Our lab study (N = 20) showed that participants, while using IdeaSynth, explored more alternative ideas and expanded initial ideas with more details compared to a strong LLM-based baseline. Our deployment study (N = 7) demonstrated that participants effectively used IdeaSynth for real-world research projects at various ideation stages, from developing initial ideas to revising framings of mature manuscripts, highlighting the possibilities to adopt IdeaSynth in researchers’ workflows.

@inproceedings{pu2024ideasynth,
  author = {Pu, Kevin and Feng, K. J. Kevin and Grossman, Tovi and Hope, Tom and Dalvi Mishra, Bhavana and Latzke, Matt and Bragg, Jonathan and Chang, Joseph Chee and Siangliulue, Pao},
  title = {IdeaSynth: Iterative Research Idea Development Through Evolving and Composing Idea Facets with Literature-Grounded Feedback},
  year = {2025},
  isbn = {9798400713941},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3706598.3714057},
  doi = {10.1145/3706598.3714057},
  booktitle = {Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems},
  articleno = {145},
  numpages = {31},
  keywords = {Research Ideation; Scientific Literature; Human-AI Collaboration},
  location = {Yokohama, Japan},
  series = {CHI '25},
}

Cocoa: Co-Planning and Co-Execution with AI Agents. K. J. Kevin Feng, Kevin Pu, Matt Latzke, Tal August, Pao Siangliulue, Jonathan Bragg, Daniel S. Weld, Amy X. Zhang, and Joseph Chee Chang. 2025.

@misc{feng2025cocoacoplanningcoexecutionai,
  title = {Cocoa: Co-Planning and Co-Execution with AI Agents},
  author = {Feng, K. J. Kevin and Pu, Kevin and Latzke, Matt and August, Tal and Siangliulue, Pao and Bragg, Jonathan and Weld, Daniel S. and Zhang, Amy X. and Chang, Joseph Chee},
  year = {2025},
  eprint = {2412.10999},
  archiveprefix = {arXiv},
  primaryclass = {cs.HC},
  url = {https://arxiv.org/abs/2412.10999},
}

2024

- UIST

VizGroup: An AI-assisted Event-driven System for Collaborative Programming Learning Analytics. Xiaohang Tang, Sam Wong, Kevin Pu, Xi Chen, Yalong Yang, and Yan Chen. In Proceedings of the 37th Annual ACM Symposium on User Interface Software and Technology, Pittsburgh, PA, USA, 2024.

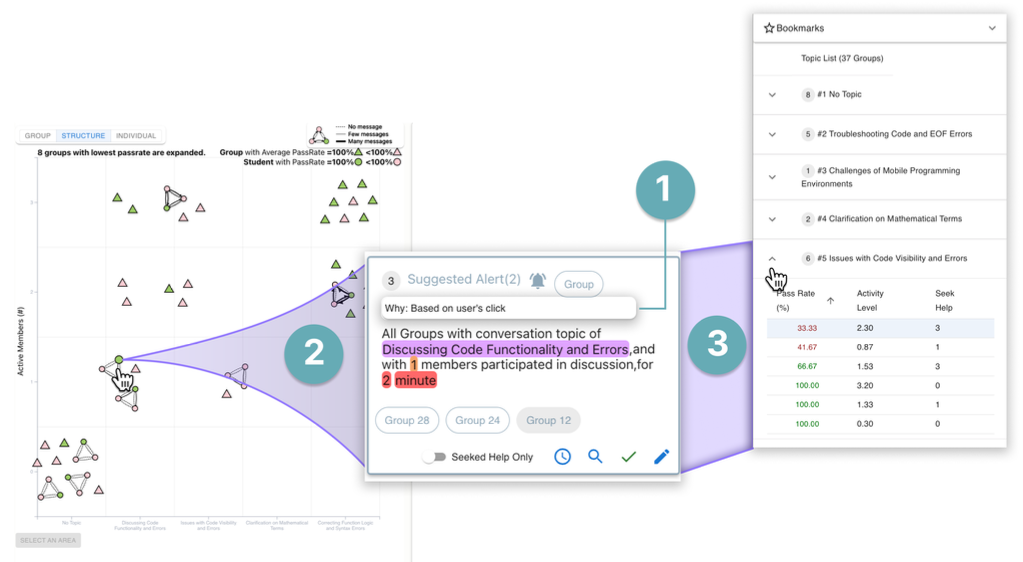

Programming instructors often conduct collaborative learning activities, like Peer Instruction, to foster a deeper understanding in students and enhance their engagement with learning. These activities, however, may not always yield productive outcomes due to the diversity of student mental models and their ineffective collaboration. In this work, we introduce VizGroup, an AI-assisted system that enables programming instructors to easily oversee students’ real-time collaborative learning behaviors during large programming courses. VizGroup leverages Large Language Models (LLMs) to recommend event specifications for instructors so that they can simultaneously track and receive alerts about key correlation patterns between various collaboration metrics and ongoing coding tasks. We evaluated VizGroup with 12 instructors in a comparison study using a dataset collected from a Peer Instruction activity that was conducted in a large programming lecture. The results showed that VizGroup helped instructors effectively overview, narrow down, and track nuances throughout students’ behaviors.

@inproceedings{10.1145/3654777.3676347,
  author = {Tang, Xiaohang and Wong, Sam and Pu, Kevin and Chen, Xi and Yang, Yalong and Chen, Yan},
  title = {VizGroup: An AI-assisted Event-driven System for Collaborative Programming Learning Analytics},
  year = {2024},
  isbn = {9798400706288},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3654777.3676347},
  doi = {10.1145/3654777.3676347},
  booktitle = {Proceedings of the 37th Annual ACM Symposium on User Interface Software and Technology},
  articleno = {93},
  numpages = {22},
  keywords = {Collaborative Learning, Programming Education},
  location = {Pittsburgh, PA, USA},
  series = {UIST '24},
}

- CHI

Behind the Pup-ularity Curtain: Understanding the Motivations, Challenges, and Work Performed in Creating and Managing Pet Influencer Accounts. Suhyeon Yoo, Kevin Pu, and Khai N. Truong. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 2024.

Creating dedicated accounts to post users’ pet content is a growing trend on Instagram. While these account owners derive joy from this pursuit, they may also struggle with criticisms and challenges. Yet, there remains a knowledge gap on how pet account owners manage their pets’ online presence and navigate these obstacles successfully. Drawing from interviews with 21 Instagram pet account owners, we uncover the motivations behind pet account creation, spanning personal, altruistic, and commercial goals. We learn about the strategies employed for crafting their pets’ online identities and personas, as well as the challenges faced by both owners and their pets in navigating the complexities of digital identity management. We discuss the evolving dynamics between humans and their pets, positioning pet identity cultivation as a form of collaborative work, akin to the “third shift”, highlighting the need to design interfaces that support this unique identity management process.

@inproceedings{10.1145/3613904.3642367,
  author = {Yoo, Suhyeon and Pu, Kevin and Truong, Khai N.},
  title = {Behind the Pup-ularity Curtain: Understanding the Motivations, Challenges, and Work Performed in Creating and Managing Pet Influencer Accounts},
  year = {2024},
  isbn = {9798400703300},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3613904.3642367},
  doi = {10.1145/3613904.3642367},
  booktitle = {Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems},
  articleno = {736},
  numpages = {17},
  keywords = {Instagram, human-animal interaction, pet influencers, social media},
  location = {Honolulu, HI, USA},
  series = {CHI '24},
}

2023

- UIST

DiLogics: Creating Web Automation Programs with Diverse Logics. Kevin Pu, Jim Yang, Angel Yuan, Minyi Ma, Rui Dong, Xinyu Wang, Yan Chen, and Tovi Grossman. In Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology, San Francisco, CA, USA, 2023.

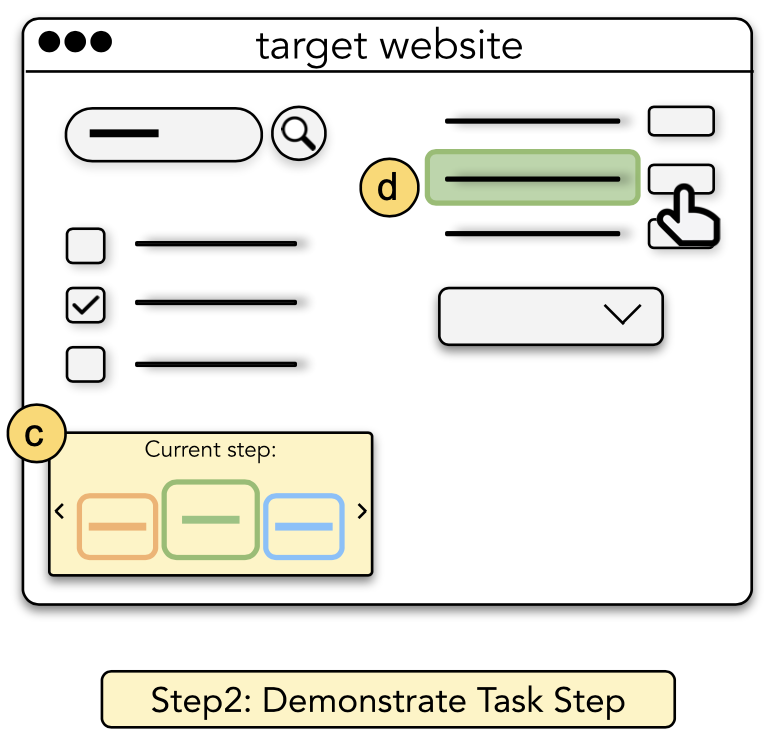

Knowledge workers frequently encounter repetitive web data entry tasks, like updating records or placing orders. Web automation increases productivity, but translating tasks to web actions accurately and extending to new specifications is challenging. Existing tools can automate tasks that perform the same logical trace of UI actions (e.g., input text in each field in order), but do not support tasks requiring different executions based on varied input conditions. We present DiLogics, a programming-by-demonstration system that utilizes NLP to assist users in creating web automation programs that handle diverse specifications. DiLogics first semantically segments input data to structured task steps. By recording user demonstrations for each step, DiLogics generalizes the web macros to novel but semantically similar task requirements. Our evaluation showed that non-experts can effectively use DiLogics to create automation programs that fulfill diverse input instructions. DiLogics provides an efficient, intuitive, and expressive method for developing web automation programs satisfying diverse specifications.

@inproceedings{10.1145/3586183.3606822,
  author = {Pu, Kevin and Yang, Jim and Yuan, Angel and Ma, Minyi and Dong, Rui and Wang, Xinyu and Chen, Yan and Grossman, Tovi},
  title = {DiLogics: Creating Web Automation Programs with Diverse Logics},
  year = {2023},
  isbn = {9798400701320},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3586183.3606822},
  doi = {10.1145/3586183.3606822},
  booktitle = {Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology},
  articleno = {74},
  numpages = {15},
  keywords = {PBD, Web automation, neurosymbolic programming},
  location = {San Francisco, CA, USA},
  series = {UIST '23},
}

2022

- UIST

SemanticOn: Specifying Content-Based Semantic Conditions for Web Automation Programs. Best Paper Honorable Mention. Kevin Pu, Rainey Fu, Rui Dong, Xinyu Wang, Yan Chen, and Tovi Grossman. In Proceedings of the 35th Annual ACM Symposium on User Interface Software and Technology, Bend, OR, USA, 2022.


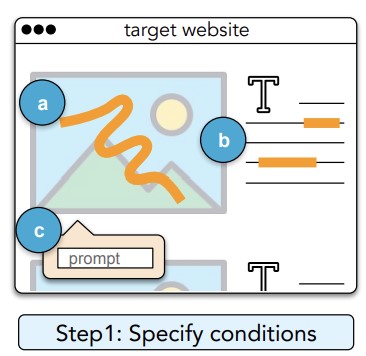

Data scientists, researchers, and clerks often create web automation programs to perform repetitive yet essential tasks, such as data scraping and data entry. However, existing web automation systems lack mechanisms for defining conditional behaviors where the system can intelligently filter candidate content based on semantic filters (e.g., extract texts based on key ideas or images based on entity relationships). We introduce SemanticOn, a system that enables users to specify, refine, and incorporate visual and textual semantic conditions in web automation programs via two methods: natural language description via prompts or information highlighting. Users can coordinate with SemanticOn to refine the conditions as the program continuously executes or reclaim manual control to repair errors. In a user study, participants completed a series of conditional web automation tasks. They reported that SemanticOn helped them effectively express and refine their semantic intent by utilizing visual and textual conditions.

@inproceedings{10.1145/3526113.3545691,
  author = {Pu, Kevin and Fu, Rainey and Dong, Rui and Wang, Xinyu and Chen, Yan and Grossman, Tovi},
  title = {SemanticOn: Specifying Content-Based Semantic Conditions for Web Automation Programs},
  year = {2022},
  isbn = {9781450393201},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3526113.3545691},
  doi = {10.1145/3526113.3545691},
  booktitle = {Proceedings of the 35th Annual ACM Symposium on User Interface Software and Technology},
  articleno = {63},
  numpages = {16},
  keywords = {user intent, semantics, Web automation, PBD},
  location = {Bend, OR, USA},
  series = {UIST '22},
}